🔥 Google releases TranslateGemma, and it's pretty cool:

- New open translation models built on Gemma 3, available in 4B, 12B, and 27B parameter sizes

- Supports 55 languages with training on nearly 500 additional language pairs for further research

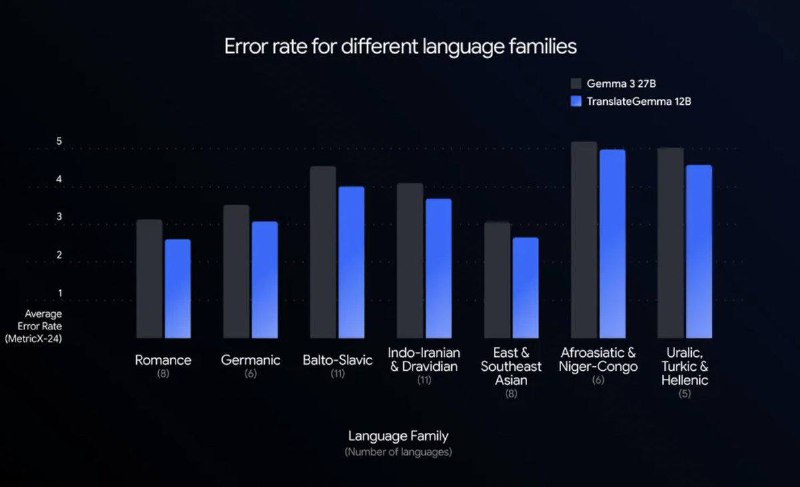

- Impressive efficiency: the 12B model outperforms the 27B baseline, achieving better quality with less than half the parameters

- Two-stage training process using supervised fine-tuning on human and Gemini-generated translations, plus reinforcement learning for natural-sounding output

- Multimodal capabilities retained, can translate text within
