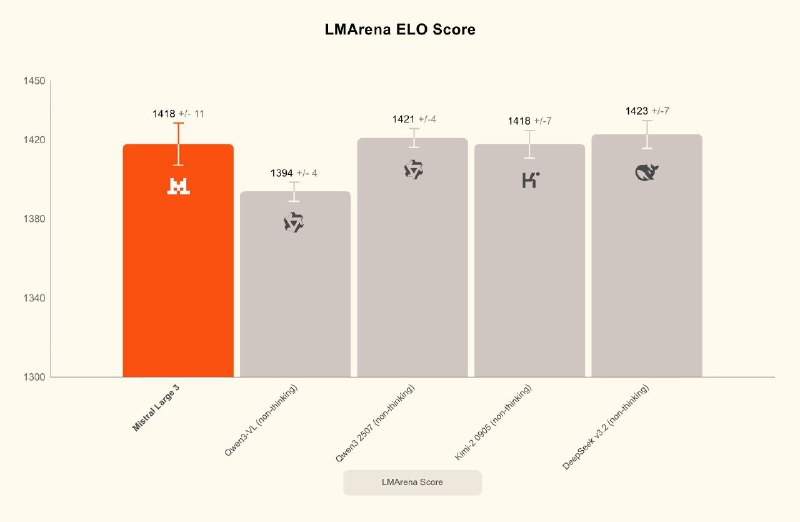

🔥 Mistral unveils its third-generation open multimodal, multilingual model family

Mistral has launched Mistral 3, a full family of open-weight models: Ministral 3B, 8B, and 14B, plus the flagship Mistral Large 3, a mixture-of-experts (MoE) model with 41B active and 675B total parameters. All models are released under Apache 2.0 and support image input, multiple languages, and efficient deployment, even on edge devices.
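
Here is a back-of-the-envelope sketch of what the active-versus-total parameter split of Mistral Large 3 means. It rests on two assumptions that are not from the announcement: weights stored in bf16 (2 bytes per parameter), and the common rule of thumb of about 2 FLOPs per active parameter per token for a forward pass. The function names are illustrative only.

```python
# Rough arithmetic for an MoE model's parameter counts.
# Assumptions (not from the announcement): bf16 weights (2 bytes/param) and
# the ~2 FLOPs per active parameter per token forward-pass heuristic.

TOTAL_PARAMS = 675e9   # every expert combined: all of these must be stored
ACTIVE_PARAMS = 41e9   # parameters actually used to process each token
BYTES_PER_PARAM = 2    # assumption: bf16 weights


def active_fraction(active: float, total: float) -> float:
    """Share of the model's parameters that participate in one token."""
    return active / total


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Approximate storage for the weights alone (no KV cache or activations)."""
    return params * bytes_per_param / 1e9


def forward_flops_per_token(active: float) -> float:
    """Rough forward-pass cost: ~2 FLOPs per active parameter (heuristic)."""
    return 2 * active


if __name__ == "__main__":
    frac = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
    print(f"active fraction: {frac:.1%}")
    print(f"bf16 weight memory: {weight_memory_gb(TOTAL_PARAMS, BYTES_PER_PARAM):,.0f} GB")
    print(f"forward FLOPs per token: {forward_flops_per_token(ACTIVE_PARAMS):.2e}")
```

Under these assumptions, memory is sized by the 675B total (about 1,350 GB of bf16 weights), while per-token compute is sized by the 41B active parameters (about 6% of the model). That is the usual trade-off an MoE design makes against a dense model of the same total size.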

The Ministral models come in base, instruct, and reasoning versions. The instruct models match competitors' results while generating fewer tokens, and the reasoning models deliver strong math performance. Mistral Large 3, trained on 3,000 NVIDIA H20